When it comes to building a mind from scratch, I think I am closing in on a rough blueprint, one that requires neither souls nor proto-phenomenal properties.

I believe an economical solution to most of the big problems in philosophy of mind emerges when one embraces:

- a quotational (or constitutive) account of phenomenal concepts,

- a functional account of phenomenal types,

- an identity account of phenomenal tokens, and

- a predictive processing perspective on intelligent brain function.

The Language of Thought

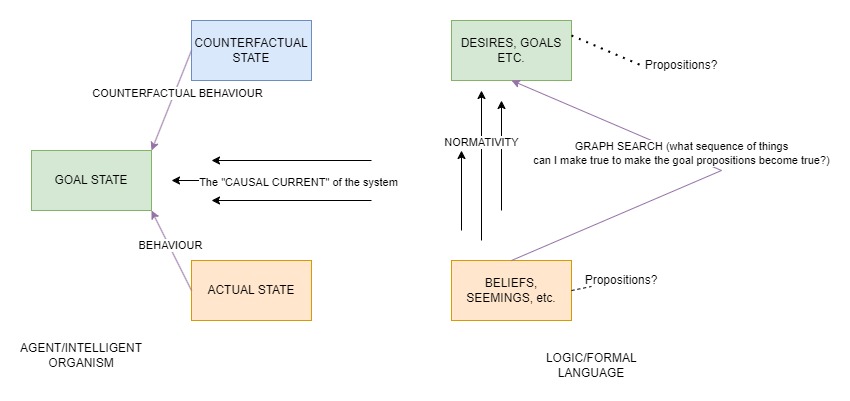

I think highly intelligent goal-chasing systems have language-like functional characteristics. What I call the 'causal current' between possible physical states of these systems is functionally analogous to the normative relationship between believed propositions and goal propositions.

Phenomenal Concepts

I advocate a quotational account of direct phenomenal concepts; for a similar account, see Balog (2012). One difference from Balog's account is that I also actively espouse a functional, dispositional, and relational account of qualia.

I'm a big fan of Balog's rather literal interpretation of the term 'quotational', as I think the analogy between our language of thought and quotation in natural language is a strong one.

Higher-Order Thought Theory

I advocate a quotational higher-order thought theory (see Coleman, 2015; Picciuto, 2011).

Predictive Processing Theory

I think our higher-order thoughts quote the states that they predict and detect. This gives us an epistemic advantage when monitoring the quality of our world model.

Reflexive Beliefs

I think the higher-order thoughts that quote our phenomenal states are reflexive, which gives us another epistemic advantage when monitoring the quality of our world model. For an argument to that effect, see Douglas Campbell's A Theory of Consciousness.

Other Interests

Metaphilosophy

I think my theories of mentality may shed light on folk philosophical intuitions (to which academics are no less subject!). The idea, in essence, is that false or meaningless metaphysical assumptions may be required for an agent to model the world intelligently to any extent.

Cognitive Architectures

Especially ACT-R and SOAR, as well as my own.

Symbolic and neurosymbolic Artificial Intelligence

Cellular automata

Including neural cellular automata and graph cellular automata.
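As a rough illustration of how the graph generalization works, here is a minimal sketch of a synchronous graph cellular automaton in Python. It is not taken from any library or from my own architecture: the names `grid_graph`, `step`, and `life_rule` are hypothetical, and the rule is hand-written (Conway's B3/S23) rather than learned. Roughly speaking, a neural cellular automaton would swap `life_rule` for a trainable function of each node's neighborhood.

```python
from typing import Callable, Dict, List

# Hypothetical aliases for this sketch only.
Graph = Dict[int, List[int]]      # adjacency list: node -> its neighbors
Rule = Callable[[int, int], int]  # (own state, live-neighbor count) -> next state


def life_rule(state: int, live_neighbors: int) -> int:
    """Conway's B3/S23 rule, applied to a single node."""
    if state == 1:
        return 1 if live_neighbors in (2, 3) else 0
    return 1 if live_neighbors == 3 else 0


def step(graph: Graph, states: Dict[int, int], rule: Rule) -> Dict[int, int]:
    """One synchronous update: every node reads only the *old* states."""
    return {
        node: rule(states[node], sum(states[n] for n in neighbors))
        for node, neighbors in graph.items()
    }


def grid_graph(width: int, height: int) -> Graph:
    """A toroidal grid with Moore neighborhoods, encoded as a generic graph."""

    def idx(x: int, y: int) -> int:
        return (y % height) * width + (x % width)

    graph: Graph = {}
    for y in range(height):
        for x in range(width):
            graph[idx(x, y)] = [
                idx(x + dx, y + dy)
                for dx in (-1, 0, 1)
                for dy in (-1, 0, 1)
                if (dx, dy) != (0, 0)
            ]
    return graph
```

On a 5-by-5 torus, a horizontal three-cell "blinker" turns vertical after one `step` and back to horizontal after the next. Because `step` only sees an adjacency list, the same function runs unchanged on irregular graphs.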

Neuroscience

Spiking models, Hebbian learning (and anti-Hebbian, reward-modulated Hebbian, and other variants), pruning algorithms, neural Darwinism, coincidence detection, etc.
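In their simplest rate-based form, the Hebbian variants above differ mainly in the sign of the update and in whether a third factor gates it: dw[i][j] = sign * lr * modulator * post[i] * pre[j]. The sketch below is a simplified illustration under those assumptions and does not model spiking dynamics. The names `hebbian_update`, `sign`, and `modulator` are hypothetical. The reward-modulated case uses reward minus a baseline as the third factor and omits the eligibility traces that practical three-factor rules usually include.

```python
from typing import List

# Hypothetical alias: w[i][j] is the weight from presynaptic unit j to postsynaptic unit i.
Matrix = List[List[float]]


def hebbian_update(
    w: Matrix,
    pre: List[float],
    post: List[float],
    lr: float,
    sign: float = 1.0,
    modulator: float = 1.0,
) -> Matrix:
    """Return new weights with dw[i][j] = sign * lr * modulator * post[i] * pre[j].

    sign=+1.0          -> plain Hebbian: co-activity strengthens the connection
    sign=-1.0          -> anti-Hebbian: co-activity weakens the connection
    modulator=(r - b)  -> simple reward-modulated variant, with reward r and a
                          baseline b (both assumed inputs; no eligibility trace)
    The input matrix is not modified.
    """
    return [
        [w[i][j] + sign * lr * modulator * post[i] * pre[j] for j in range(len(pre))]
        for i in range(len(post))
    ]
```

With one postsynaptic unit, `pre = [1.0, 0.0]`, and `lr = 0.5`, only the weight from the active input changes. It rises by 0.5 under the plain rule and falls by 0.5 under the anti-Hebbian rule. In the reward-modulated case, it moves up or down depending on whether reward exceeds the baseline.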

Artificial Life

Check out Léo Caussan's The Bibites for a good example. I am working on an artificial life project of my own.